Potential Outcomes Model

June 05, 2020

Hey! This post references terminology and material covered in previous blog posts. If any concept below is new to you, I strongly suggest you check out the corresponding post.

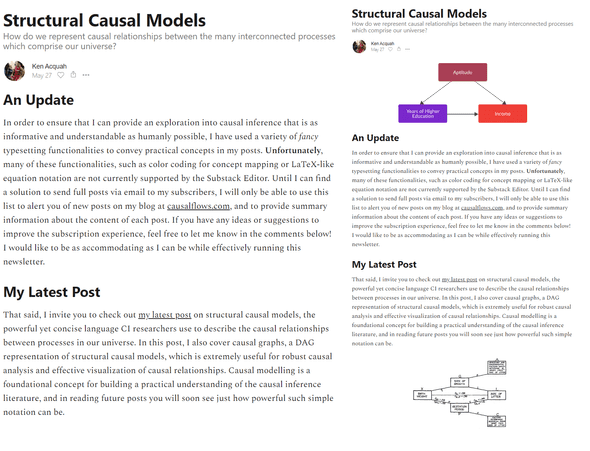

Structural Causal Models

May 27, 2020
How do we represent causal relationships between the many interconnected processes which comprise our universe?

Getting In To A Causal Flow

May 20, 2020
What is causal inference? Why is it useful? How can you use it to amplify your decision-making capabilities?

In my previous post, I discussed a powerful notation for describing hypothesized causal effects between explanatory variables corresponding to a cause, outcome variables corresponding to an effect, and unobserved variables, which also correspond to a cause but are not measured in our dataset. While this notation, structural causal models, is a useful language for describing the existence or absence of causal relationships, it cannot easily describe the quantitative causal hypotheses we are generally interested in analyzing.

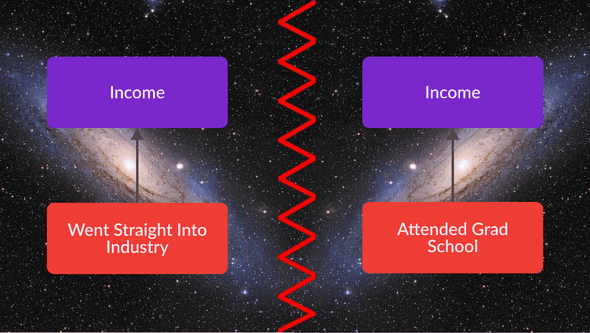

In contemporary English, we commonly describe these hypotheses as counterfactual queries, asking questions such as “How much would I earn if I had chosen to attend graduate school?” or “Can I get more of my email subscribers to read a blog post if I include images in its corresponding email alert?”. These queries are an effective way to describe causal effects because they require us to imagine a hypothetical universe that differs from ours only in the presence or absence of a particular cause. For example, when I ask what my income would be had I attended graduate school, I am imagining my income in a hypothetical universe in which I attended graduate school instead of going straight to work after undergrad. Measuring the difference between this hypothetical universe and ours allows us to isolate the effect this cause has on other variables in our universe and to quantify the extent to which the presence of this cause generates particular observed outcomes.

Counterfactual questions are generally answered by analyzing data with some variation in the explanatory variable, whose values define two separate hypothetical universes. For example, if I wanted to estimate the extent to which adding images to my email alerts encourages the email subscribers of Causal Flows to open my blog posts, I would need data tracking which of my subscribers opened my blog post during a period in which some subscribers received images in their email alerts and some did not.

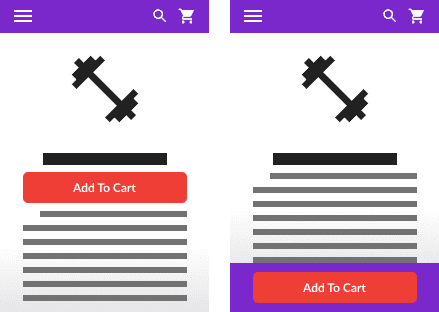

Potential Outcomes

These counterfactual queries often concern potential outcomes: hypotheses describing the values of outcome variables in the hypothetical universes in which certain explanatory variables take particular values. For example, consider the question: “Can I get one of my email subscribers to read a blog post if I include images in its corresponding email alert?”. Perhaps I am interested in changing my email alerts to resemble the version displayed on the right of figure 2 in order to get more of my subscribers to click the hyperlink to my latest post. In this example, the explanatory variable is Images In Email Alert ($I$) and the outcome variable is Opens Blog Post ($O$). I am interested in whether or not an individual email subscriber $i$ opens my blog post after reading my email alert, both given that it contains images and given that it does not. As a reminder, the structural causal model describing the hypothetical effect of the former on the latter is as follows:

And its corresponding causal graph is:

How can we quantify the size of this hypothetical causal effect? How can we make an inference about the probability that adding images to my email alert will encourage an individual subscriber to open my blog post? Adding images will take a lot of effort, so I wouldn’t want to take the time to make the change if it would only make a reader 1% more likely to open my newsletter. Statisticians Jerzy Neyman and Donald Rubin formalized a model for investigating counterfactual queries, commonly referred to as the potential outcomes model. The simplest version of this powerful model consists of four main concepts.

Indicator Variables

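Indicator variables are mathematical variables used to represent discrete events. An indicator variable takes only the value 1 or 0 to indicate the presence or absence of a particular causal event. For example, the event that I include images in the email alert sent to a particular email subscriber $i$ can be represented by the following indicator variable:

$$
I_i = \begin{cases} 1 & \text{if subscriber } i \text{ receives an email alert with images} \\ 0 & \text{if subscriber } i \text{ receives an email alert without images} \end{cases}
$$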

In the potential outcomes model, an explanatory variable is often referred to as the treatment variable, and the state in which the explanatory variable takes the value 1 for a particular individual is commonly referred to as the treatment. This borrows from terminology commonly used in the medical sciences. When an explanatory indicator variable $D_i$ takes the value 1 for individual $i$, we say that individual $i$ is treated; when it takes the value 0, we say that individual $i$ is untreated. For example, suppose I were to send one portion of my email subscribers email alerts with images and another portion email alerts without images. Sending email alerts with images would be the treatment, the subscribers who received those alerts would be treated, and the subscribers who received alerts without images would be untreated.
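As a minimal sketch of this notation, the Python snippet below encodes a treatment indicator $D_i$ for a handful of subscribers and splits them into treated and untreated groups. The subscriber IDs and the choice of who receives images are hypothetical values made up for illustration, not real data.

```python
def indicator(condition: bool) -> int:
    """Return 1 if the condition holds and 0 otherwise (an indicator variable)."""
    return 1 if condition else 0


# Hypothetical subscriber IDs and a hypothetical set of subscribers
# who receive the email alert with images (the treatment).
subscribers = list(range(8))
received_images = {0, 2, 4, 6}

# D[i] is the treatment indicator for subscriber i.
D = [indicator(i in received_images) for i in subscribers]

treated = [i for i in subscribers if D[i] == 1]
untreated = [i for i in subscribers if D[i] == 0]

print(D)          # [1, 0, 1, 0, 1, 0, 1, 0]
print(treated)    # [0, 2, 4, 6]
print(untreated)  # [1, 3, 5, 7]
```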

Potential Outcomes

Potential outcomes are mathematical variables used to represent outcome variables in the two hypothetical universes we consider when asking a counterfactual question. We commonly represent these hypothetical universes by adding a superscript to a particular outcome variable, giving the value of the explanatory variable in the corresponding hypothetical universe. With this notation, for an outcome variable $Y$ and an explanatory indicator variable $D$, $Y_i^1$ is the potential outcome for individual $i$ when $D_i = 1$, and $Y_i^0$ is the potential outcome for individual $i$ when $D_i = 0$.

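For example, in our counterfactual question concerning images in email alerts, the potential outcomes take on the following values depending on whether or not individual $i$ opens a blog post, given the type of email alert they see:

$$
O_i^1 = \begin{cases} 1 & \text{if subscriber } i \text{ opens the blog post after an alert with images} \\ 0 & \text{otherwise} \end{cases}
$$

$$
O_i^0 = \begin{cases} 1 & \text{if subscriber } i \text{ opens the blog post after an alert without images} \\ 0 & \text{otherwise} \end{cases}
$$

Note that the two potential outcomes in this simple version of the potential outcomes model are also indicator variables.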

The two hypothetical universes described by these potential outcomes are the universe in which individual $i$ receives an email alert without images ($O_i^0$) and the universe in which individual $i$ receives an email alert with images ($O_i^1$).

Treatment Effect

The treatment effect, or causal effect, is the difference between the two “hypothetical universes” we consider when asking our counterfactual question. Concisely, for a particular individual $i$, it is the difference between the two potential outcomes $Y_i^1$ (in which individual $i$ receives treatment) and $Y_i^0$ (in which individual $i$ does not receive treatment):

$$\delta_i = Y_i^1 - Y_i^0$$

Applications of the potential outcomes model are largely concerned with estimating this crucial value, as it quantifies the causal relationships that causal inference practitioners contemplate endlessly. If I knew the treatment effect for each of my individual email subscribers, I would have a precise measurement of the value of adding images to my email alerts: precisely the data I need to make an informed decision about my proposed intervention.
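A minimal sketch of the individual treatment effect, assuming binary potential outcomes as in the email example. The class `PotentialOutcomes` and its method are hypothetical names chosen for illustration. Note that with binary outcomes, $\delta_i$ can only be $-1$, $0$, or $1$.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PotentialOutcomes:
    """Both potential outcomes for one individual (hypothetical helper)."""
    y1: int  # outcome if treated, e.g. opens the post after an alert with images
    y0: int  # outcome if untreated, e.g. opens the post after an alert without images

    def treatment_effect(self) -> int:
        """delta_i = Y_i^1 - Y_i^0."""
        return self.y1 - self.y0


# A subscriber who opens the post only if the alert has images.
print(PotentialOutcomes(y1=1, y0=0).treatment_effect())  # 1
# A subscriber who opens the post either way.
print(PotentialOutcomes(y1=1, y0=1).treatment_effect())  # 0
# A subscriber put off by images (opens only without them).
print(PotentialOutcomes(y1=0, y0=1).treatment_effect())  # -1
```

Of course, in practice we never hold both `y1` and `y0` for the same subscriber; this sketch only makes the definition concrete.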

Observable Outcomes

Observable outcomes are the outcomes we eventually observe, and they depend on whether or not we apply treatment to a particular individual. For a given outcome variable $Y$ and an explanatory indicator variable $D$, the observable outcome for a particular individual $i$ is represented by the following switching equation:

$$Y_i = D_i Y_i^1 + (1 - D_i) Y_i^0$$

For example, the observable outcome I witness when I consider the effect that images in email alerts have on my blog post open rate is represented as follows:

$$O_i = I_i O_i^1 + (1 - I_i) O_i^0$$

Note that for treated individuals, who receive an email alert with images, $I_i$ is 1 and $1 - I_i$ is 0, and thus I observe potential outcome $O_i^1$. Similarly, for untreated individuals, who receive an email alert without images, $1 - I_i$ is 1 and $I_i$ is 0, and thus I observe potential outcome $O_i^0$.
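The switching equation translates directly into code. This is a minimal sketch; the function name `observed_outcome` is hypothetical.

```python
def observed_outcome(d: int, y1: int, y0: int) -> int:
    """Switching equation: Y_i = D_i * Y_i^1 + (1 - D_i) * Y_i^0."""
    if d not in (0, 1):
        raise ValueError("d must be an indicator variable (0 or 1)")
    return d * y1 + (1 - d) * y0


# A subscriber who would open the post with images (y1 = 1) but not without (y0 = 0):
print(observed_outcome(d=1, y1=1, y0=0))  # 1 -> treated, so we see Y^1
print(observed_outcome(d=0, y1=1, y0=0))  # 0 -> untreated, so we see Y^0
```

Whichever potential outcome is multiplied by zero contributes nothing to the result, which is exactly why it stays hidden.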

In a typical causal inference setting, our data contains measurements of observable outcomes as well as the explanatory indicator variables describing which individuals were treated. However, for each individual the switching equation is a single equation containing two unknowns, $Y_i^1$ and $Y_i^0$, and whichever potential outcome is multiplied by zero drops out entirely, so the equation cannot be solved for both. This problem is commonly known as the fundamental problem of causal inference: it is impossible to see both potential outcomes $Y_i^1$ and $Y_i^0$ at once, that is, to observe both the hypothetical universe in which individual $i$ is treated and the one in which individual $i$ is untreated. When I sent out the email alerts for this post, I could not observe both a world in which I sent a particular subscriber an email alert with images and a world in which I sent that same subscriber an alert without images. Thus, individual treatment effects can never be observed directly. Does that mean we just give up? Of course not!
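To see why the switching equation cannot be inverted, consider two hypothetical treated subscribers who produce exactly the same observed data $(D_i, Y_i)$ but have different treatment effects. The values below are made up for illustration.

```python
def observed_outcome(d: int, y1: int, y0: int) -> int:
    """Switching equation: Y_i = D_i * Y_i^1 + (1 - D_i) * Y_i^0."""
    return d * y1 + (1 - d) * y0


# Two hypothetical subscribers, both treated (d = 1), both opening the post.
# They differ only in the counterfactual outcome y0, which we never see.
subscriber_a = {"d": 1, "y1": 1, "y0": 0}  # images caused the open
subscriber_b = {"d": 1, "y1": 1, "y0": 1}  # would have opened anyway

# What the data actually records for each subscriber: (D_i, Y_i).
observed_a = (subscriber_a["d"], observed_outcome(**subscriber_a))
observed_b = (subscriber_b["d"], observed_outcome(**subscriber_b))

# The true (unobservable) treatment effects.
effect_a = subscriber_a["y1"] - subscriber_a["y0"]
effect_b = subscriber_b["y1"] - subscriber_b["y0"]

print(observed_a == observed_b)  # True: identical observed data
print(effect_a, effect_b)        # 1 0: different treatment effects
```

No function of the observed data alone can tell these two subscribers apart, so no such function can recover $\delta_i$.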

Average Treatment Effects

While the effect of treatment on each observed individual can be valuable, oftentimes analysts are content with estimating the average treatment effect (ATE), which is the average of the individual treatment effects across all individuals. The formal equation for the ATE of a particular outcome variable $Y$ is as follows:

$$\text{ATE} = E[\delta_i] = E[Y_i^1 - Y_i^0] = E[Y_i^1] - E[Y_i^0]$$

where $E[\cdot]$ denotes the expected value; for a finite set of observations, this is the arithmetic mean.

For example, consider the following (fake) dataset indicating whether each email subscriber $i$ would open my blog post after reading its corresponding email alert, given that there are images in the email alert ($O_i^1$) and given that there are none ($O_i^0$). Note that we cannot possibly observe both columns of this data, as they take place in two different hypothetical universes. However, such an example is useful for illustrating average treatment effects.

| Subscriber $i$ | $O_i^1$ (with images) | $O_i^0$ (without images) |
|---|---|---|
| 0 | 1 | 0 |
| 1 | 1 | 0 |
| 2 | 1 | 1 |
| 3 | 0 | 0 |
| 4 | 1 | 1 |
| 5 | 1 | 0 |
| 6 | 1 | 0 |
| 7 | 0 | 0 |

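For these observations:

$$E[O_i^1] = \tfrac{6}{8} = 0.75, \qquad E[O_i^0] = \tfrac{2}{8} = 0.25$$

$$\text{ATE} = E[O_i^1] - E[O_i^0] = 0.75 - 0.25 = 0.5$$

Equivalently, the individual treatment effects are 1, 1, 0, 0, 0, 1, 1, 0, whose mean is also 0.5.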
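The same calculation in Python, as a minimal sketch using only the standard library. The rows are the (fake) potential outcomes from the table above; because expectation is linear, averaging the individual effects gives the same result as differencing the two column means.

```python
from statistics import mean

# (fake) potential outcomes from the table: (i, O_i^1, O_i^0)
data = [
    (0, 1, 0), (1, 1, 0), (2, 1, 1), (3, 0, 0),
    (4, 1, 1), (5, 1, 0), (6, 1, 0), (7, 0, 0),
]

# ATE as E[delta_i]: mean of the individual treatment effects.
effects = [o1 - o0 for _, o1, o0 in data]
ate_from_effects = mean(effects)

# ATE as E[O^1] - E[O^0]: difference of the two column means.
ate_from_means = mean(o1 for _, o1, _ in data) - mean(o0 for _, _, o0 in data)

print(ate_from_effects, ate_from_means)  # 0.5 0.5
```

This only works because the fake table gives us both columns; with real data, one column is always missing for every subscriber.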

OK, but our calculation used potential outcomes we could not observe, as individual email subscribers cannot both receive an email alert with images and receive one without. Can we calculate the average treatment effect directly without running into the fundamental problem of causal inference? Unfortunately not: we still do not have data on both potential outcomes, $O_i^1$ and $O_i^0$, for each observed individual. We can only see observable outcomes: the behavior of treated individuals when they are given treatment (which we can see because these are the individuals we treated) and the behavior of untreated individuals when they are not given treatment. However, with the help of a single calculation we can perform on observable data (and a few more involving quantities we cannot observe), we can achieve a robust estimate of the ATE and even evaluate its statistical significance. Sorry to leave you hanging, but to keep this post at a manageable length, discussion of ATE estimation will have to wait until my next post.

Why Do We Try To Estimate Average Treatment Effects?

Average treatment effect estimation with the potential outcomes model is a crucial tool for quantifying the causal effects of a proposed intervention. ATEs are used across many disciplines to solve problems well beyond deciding whether or not to add images to blog post email alerts. In agile software development, they are commonly estimated with a technique known as A/B testing: randomized experiments designed to estimate the effect of a particular change to a user interface. In medicine, ATEs are commonly estimated during drug trials in order to produce generalizable estimates of the effect of a particular therapy on patients with a particular medical profile. In economics, ATE estimation is commonly used to discern the effect of a particular public policy decision on target populations, such as the effect that an increase in a high school’s class size can have on a student’s performance.

What If The “Average” Is Not Enough?

Estimating average treatment effects would be particularly helpful for informing my choice of whether or not to add images to my newsletter emails. As of now, Substack only allows me to send one email alert to all of my subscribers, so I’m not really concerned with how adding images to an alert affects a single subscriber. For causal inference questions concerning costly interventions, such as the purchase of digital ads targeted at a particular demographic, ATEs alone are often not sufficient to inform the design of a marketing campaign optimized for revenue. These decisions require a more granular estimate of how a particular treatment affects different sets of individuals, commonly referred to in the causal inference literature as heterogeneous treatment effect (HTE) estimation. The literature on machine learning techniques for HTE estimation provides many examples of practical causal inference tooling that is extremely valuable for managing operating expenses effectively. I will cover HTEs in detail in a future blog post.